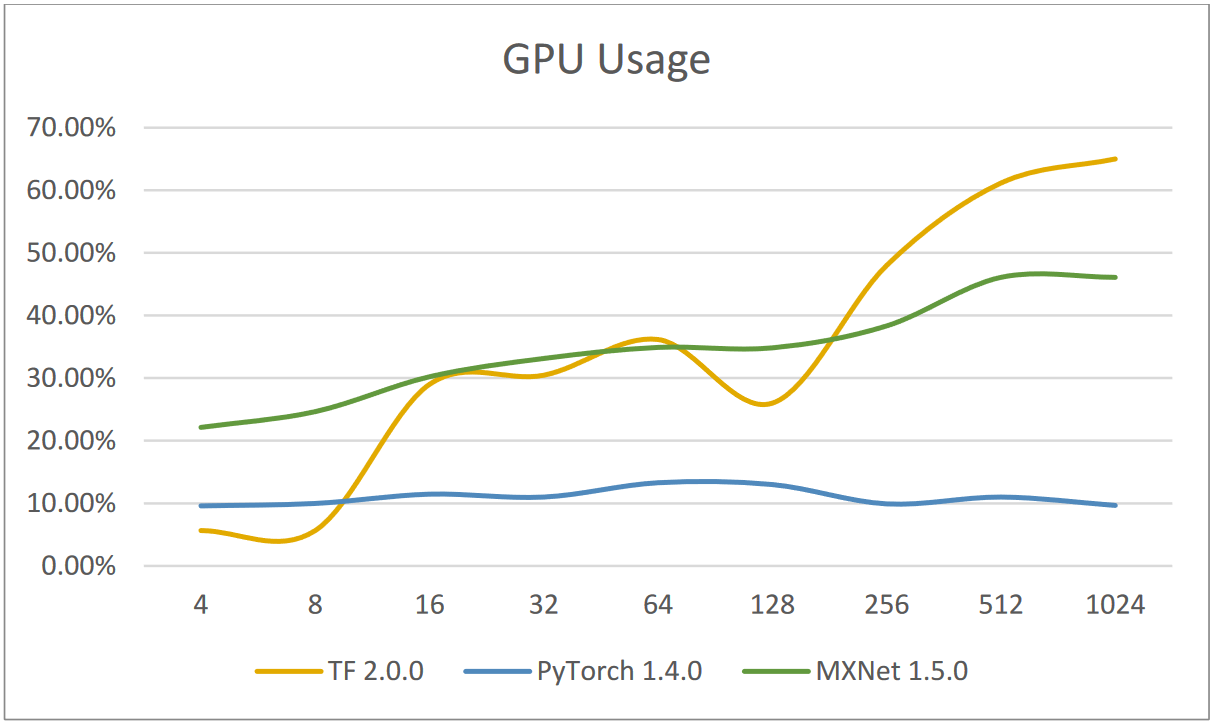

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

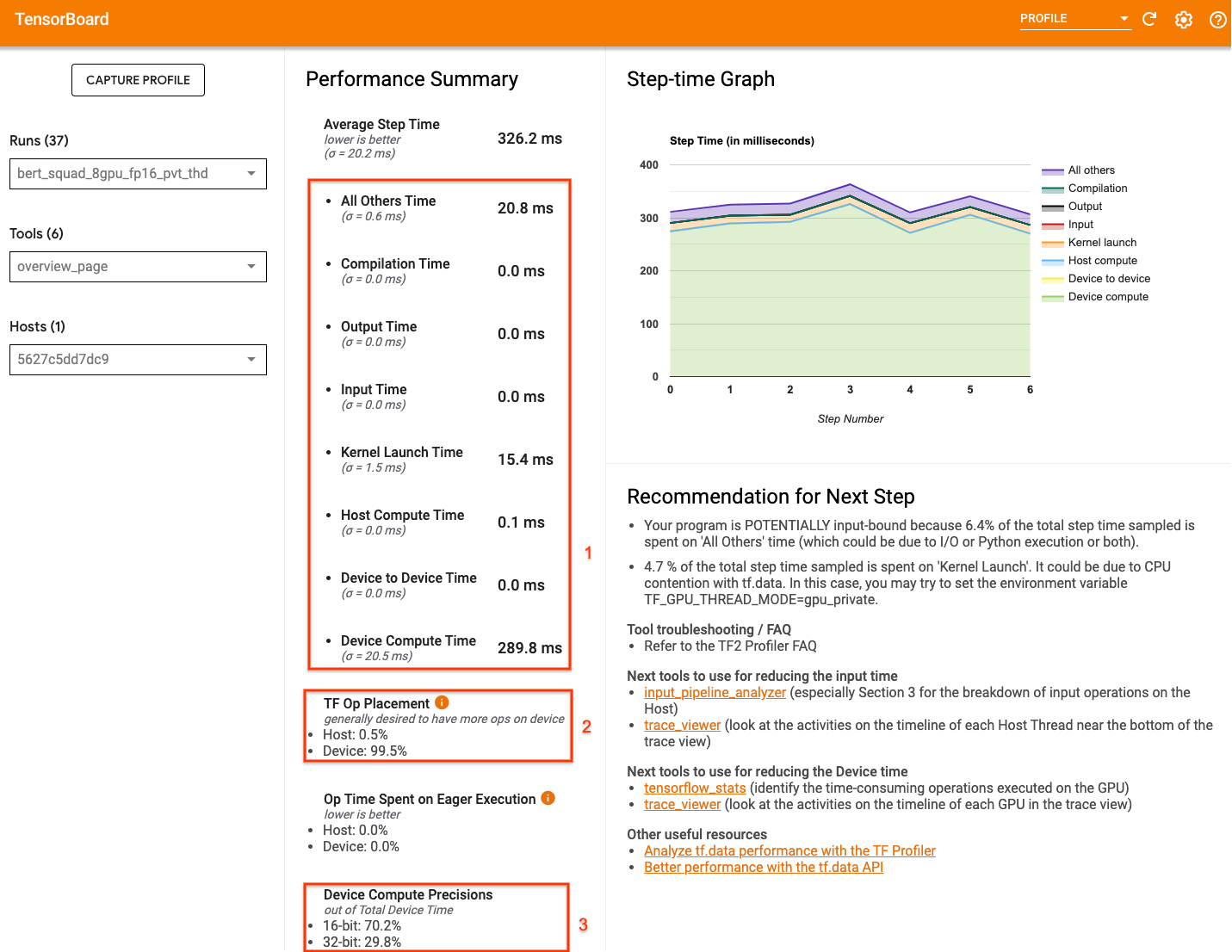

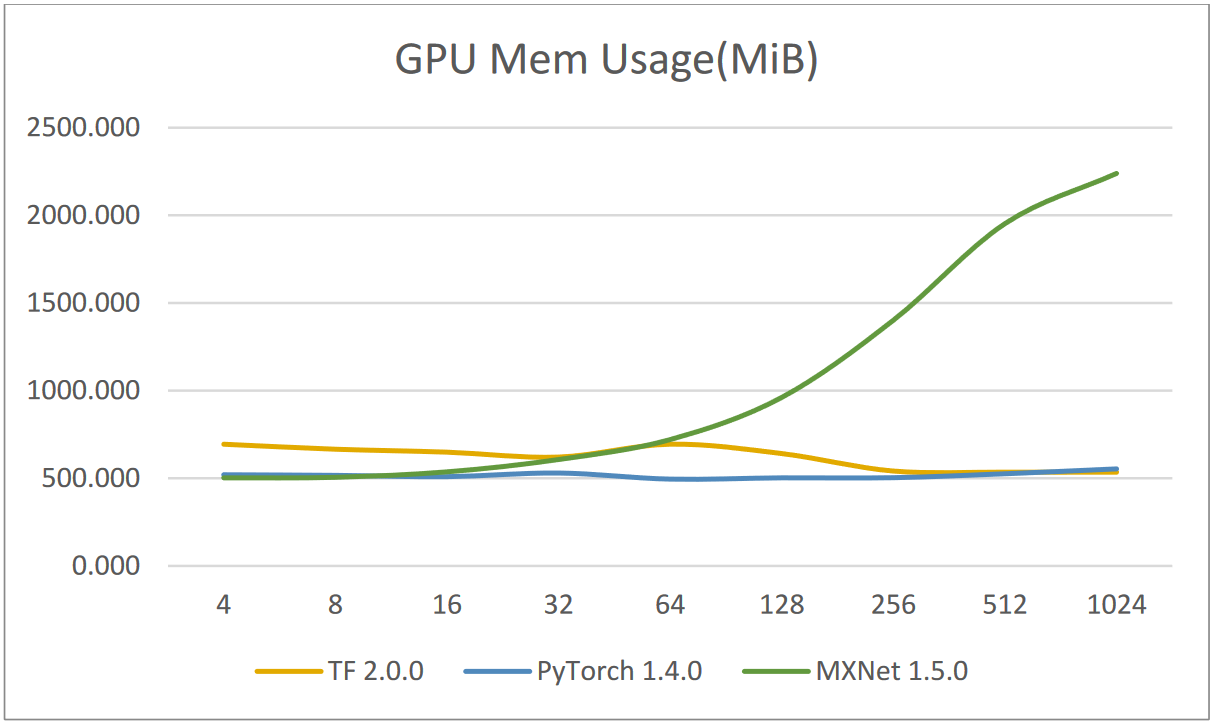

Multi-GPU and distributed training using Horovod in Amazon SageMaker Pipe mode | AWS Machine Learning Blog

Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

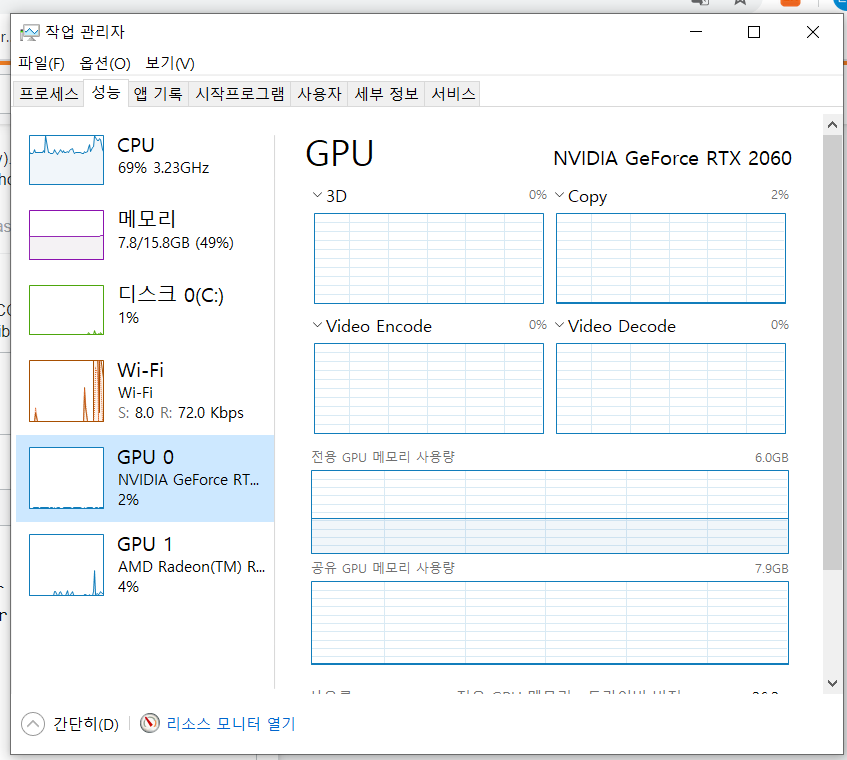

How to reduce the memory requirement for a GPU pytorch training process? (finally solved by using multiple GPUs) - vision - PyTorch Forums

Multi-GPU training. Example using two GPUs, but scalable to all GPUs... | Download Scientific Diagram